NNAI Livescu sponsors undergraduate research projects on various topics, overseen by faculty and staff in the initiative.

Please note that this is a working version and it will be updated after the research project is completed.

By Chaya Manjeshwar, 3rd-year Human Biology and Society & Cognitive Science Major

Ethical and Clinical Considerations of AI in Health-Tech

INTRODUCTION

While AI’s introduction to healthcare has been slow, it promises clinicians and patients faster diagnoses, greater efficiency and personalized care. AI tools are already embedded in the clinical workflow, giving clinicians one more data point to analyze before making a diagnosis. However, these tools have a darker side: poor regulatory oversight, opaque algorithms and biased data can lead to negative clinical outcomes and exacerbate systemic inequities.

This primer examines five cases that highlight different ethical pitfalls of AI implementation in healthcare but ultimately point to similar themes. Algorithmic performance and accuracy, implementation equity, input and output data quality, and transparency are key factors in evaluating these tools. This paper explores how medical AI tools can be used in a less harmful way and how research standards can be created and maintained to ensure equitable outcomes.

BACKGROUND

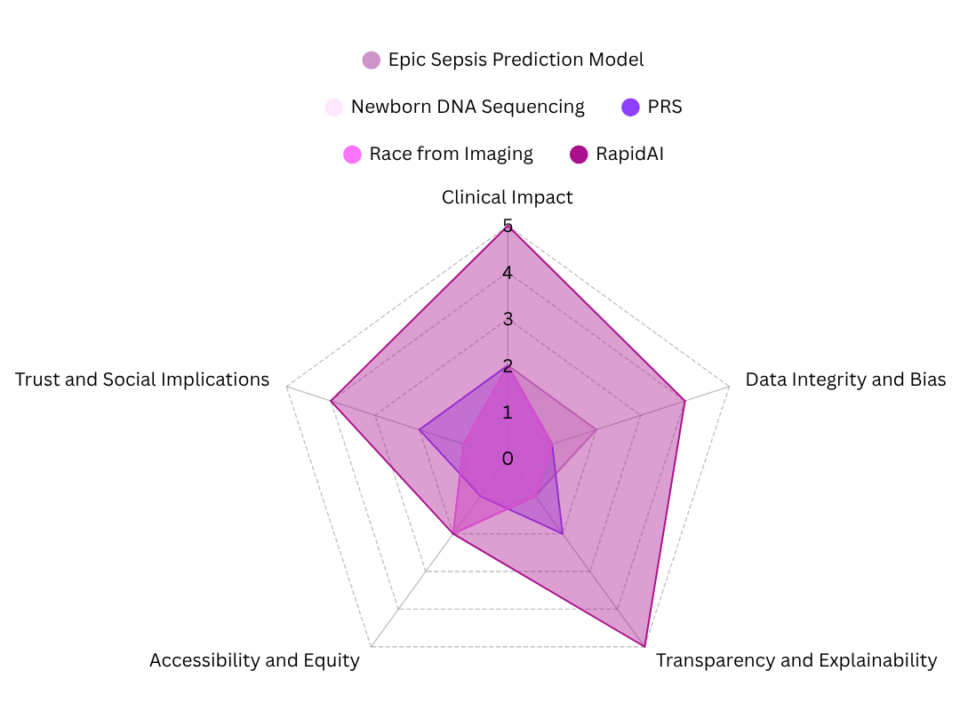

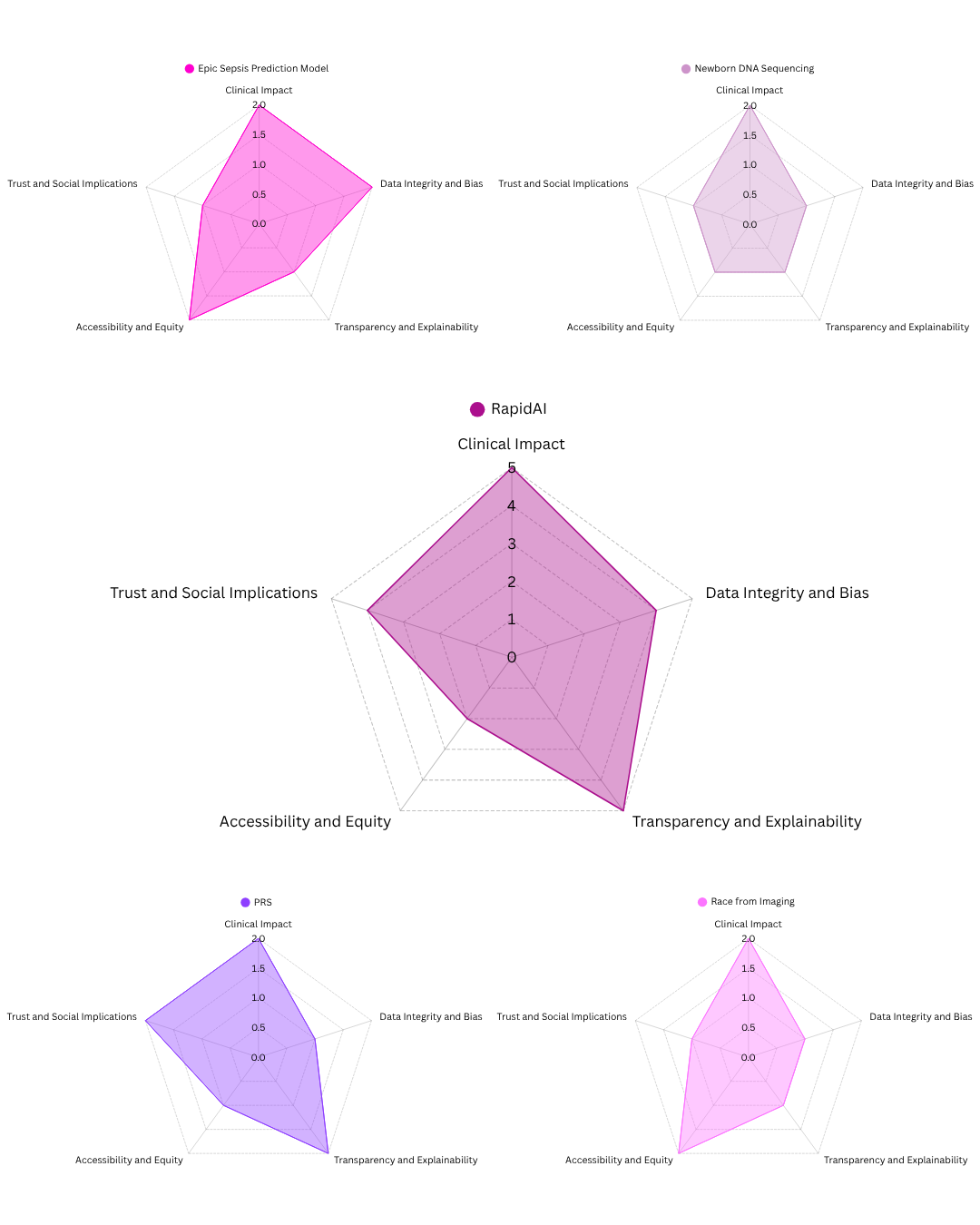

To analyze and assess the impact of AI tools in healthcare, this paper develops a set of criteria that together form an evaluation framework. The criteria are loosely adapted from the California Initiative to Advance Precision Medicine’s (CIAPM) plan to implement AI-based tools in genomic medicine by prioritizing equity, transparency and accountability across all key steps (Precision Medicine: An Action Plan for California, n.d.). This framework aims to establish broad guidelines for implementing AI tools in real-world settings, focusing on technologies that could deeply alter the current public health sphere.

- Clinical Impact

- Data Integrity and Bias

- Transparency and Explainability

- Accessibility and Equity

- Trust and Social Implications

Clinical impact refers to the quality of the outcomes that the AI tool or algorithm produces, specifically patient outcomes, accuracy, reliability and relevance to decision making. Data integrity and bias assesses the quality of the training data, focusing on its diversity and the potential for the algorithm to replicate or exacerbate existing biases in society. Transparency and explainability concerns the ability, or lack thereof, of a clinician, patient or regulatory agency to understand the decision-making process that the tool follows when choosing a recommendation or making a judgement. Accessibility and equity relates to the cost and feasibility of expanding the technology on a large scale in an equitable manner. Trust and social implications relates to how the technology might affect public trust, institutional accountability and the broader consequences of integrating AI into healthcare systems.
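The five criteria above can also be recorded as a simple rubric so that each case analysis shows which criteria it has and has not addressed. The following is only a minimal sketch: the class name `CaseAssessment`, its methods and the example notes are illustrative assumptions, not part of the CIAPM plan or of any cited study.

```python
from dataclasses import dataclass, field

# The five criteria named in this framework.
CRITERIA = (
    "Clinical Impact",
    "Data Integrity and Bias",
    "Transparency and Explainability",
    "Accessibility and Equity",
    "Trust and Social Implications",
)


@dataclass
class CaseAssessment:
    """Hypothetical container for the findings recorded about one case."""

    name: str
    findings: dict[str, list[str]] = field(default_factory=dict)

    def add(self, criterion: str, note: str) -> None:
        # Reject anything that is not one of the five framework criteria.
        if criterion not in CRITERIA:
            raise ValueError(f"unknown criterion: {criterion}")
        self.findings.setdefault(criterion, []).append(note)

    def unassessed(self) -> list[str]:
        # Criteria with no recorded finding, in framework order.
        return [c for c in CRITERIA if c not in self.findings]


# Illustrative notes only, paraphrasing the first case analysis.
epic = CaseAssessment("Epic sepsis prediction model")
epic.add("Clinical Impact", "High false positive rate, low sensitivity")
epic.add("Transparency and Explainability", "Decision process not visible to clinicians")
epic.add("Trust and Social Implications", "Deployed at scale without external validation")
print(epic.unassessed())
```

Listing the unassessed criteria makes it explicit when a case has only been examined along some dimensions of the framework.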

CASE ANALYSES

- Epic’s Sepsis Prediction Model (Wong et al., 2021):
  - Summary: An AI model developed by Epic Systems aimed to predict sepsis in hospitalized patients and was used across U.S. hospitals. Due to the complexity of testing and evaluating AI systems, this technology was not subjected to proper external evaluation and validation.
  - Clinical Impact:
    - High false positive rate and low sensitivity in hospital deployment
    - Model predictions differed substantially from actual clinical outcomes
  - Transparency and Explainability:
    - Physicians and institutions cannot see the decision-making process of the AI model
    - Limited access to the contents of the model because the algorithm is proprietary
  - Trust:
    - This tool was deployed at scale without external validation and caused harm to patients
    - Raises concerns about overreliance on tools without proper external validation in patient care

AI is complex, and it is still unknown how or why certain algorithms function in unexpected ways. Without rigorous external validation, transparency in how recommendations are delivered and equitable safeguards, AI tools can cause harm on a wide scale. Premature integration into clinical practice can do irreparable damage to patient care (Wong et al., 2021).
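The clinical impact findings in this case rest on standard alert metrics such as sensitivity and false positives. As a rough illustration of how an external validation might compute them from a confusion matrix, consider the sketch below. The function name `alert_metrics` and the counts passed to it are invented for illustration and are not the figures reported by Wong et al. (2021).

```python
def _ratio(numerator: int, denominator: int) -> float:
    """Return numerator/denominator, or NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def alert_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Summarize an alerting model from confusion-matrix counts.

    tp: patients with the condition who were flagged
    fp: patients without the condition who were flagged
    fn: patients with the condition who were missed
    tn: patients without the condition who were not flagged
    """
    total = tp + fp + fn + tn
    return {
        "sensitivity": _ratio(tp, tp + fn),   # share of true cases caught
        "specificity": _ratio(tn, tn + fp),   # share of non-cases left alone
        "ppv": _ratio(tp, tp + fp),           # share of alerts that were correct
        "alert_rate": _ratio(tp + fp, total), # share of all patients flagged
    }


# Hypothetical cohort of 1,000 patients, 100 of whom have the condition.
metrics = alert_metrics(tp=30, fp=170, fn=70, tn=730)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

In this made-up cohort the model misses most true cases while most of its alerts are false alarms, which is the pattern of low sensitivity and high false positives described above.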

- Newborn DNA Sequencing and Genetic Privacy (ACLU) (Grant, 2023):
  - Summary: Highlights ethical and legal risks tied to state-mandated newborn DNA sequencing, especially when AI is used to analyze or store genetic data.
  - Clinical Impact:
    - AI is used to analyze and sequence newborn genomic data, often before clinical necessity is clear
  - Data Integrity and Bias:
    - Risk of surveillance/discrimination and long-term data misuse
    - Disproportionately impacts marginalized families when dealing with law enforcement
  - Transparency and Explainability:
    - Consent infrastructure is heavily lacking as parents are not aware of how data is stored and used
  - Trust:
    - Law enforcement used newborn databases without parental consent, raising questions about genetic surveillance

The use of this data in ways unrelated to care highlights the risks of integrating AI with genetic data systems without strict laws defining privacy. The absence of enforceable protections leaves data at risk of misuse (Grant, 2023).

- AI in Polygenic Risk Scores (Fritzsche et al., 2023):
  - Summary: Explores how AI is used to generate polygenic risk scores (PRS), estimates of disease susceptibility based on variants found across a person’s genome.
  - Clinical Impact:
    - Unclear predictive utility, especially across diverse populations.
    - Often used in research or early-stage screening, not validated for treatment.
  - Data Integrity and Bias:
    - Strong Eurocentric bias in training data.
    - Risk of inaccurate results for non-European ancestry groups.
  - Transparency and Explainability:
    - Minimal explanation of how the PRS is generated and how the algorithms work
    - Leads to inability to interpret the score correctly
  - Trust:
    - Informed consent issues and tension between beneficence and non-maleficence

If training data, performance metrics and regulatory standards are not inclusive or transparent, AI in genomic medicine can worsen health disparities and provide misinformation to patients and clinicians (Fritzsche et al., 2023).
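One way to make performance metrics inclusive is to report them separately for each ancestry group rather than as a single pooled number. The sketch below assumes the risk score has already been thresholded into a binary high-risk flag. The function name `sensitivity_by_group`, the group labels and the toy records are hypothetical and are not drawn from Fritzsche et al. (2023).

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute per-group sensitivity.

    records: iterable of (group, y_true, y_pred) tuples, where y_true is 1 if
    the person developed the condition and y_pred is 1 if the tool flagged
    them as high risk. Groups with no true cases are omitted.
    """
    caught = defaultdict(int)
    missed = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                caught[group] += 1
            else:
                missed[group] += 1
    groups = sorted(set(caught) | set(missed))
    return {g: caught[g] / (caught[g] + missed[g]) for g in groups}


# Toy data: group "A" is well represented in training, group "B" is not.
records = (
    [("A", 1, 1)] * 3 + [("A", 1, 0)] * 1 + [("A", 0, 0)] * 2
    + [("B", 1, 1)] * 1 + [("B", 1, 0)] * 3 + [("B", 0, 1)] * 1
)
per_group = sensitivity_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, f"gap={gap:.2f}")
```

A large gap between groups is a signal that a pooled accuracy figure is hiding worse performance for an underrepresented population.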

- AI Predicting Race from Imaging (Gichoya et al., 2022):
  - Summary: A modeling study demonstrating how AI can accurately detect a patient’s race from medical images, something that a human physician cannot do.
  - Clinical Impact:
    - AI can accurately predict self-reported race from medical imaging, even when the images are degraded; not even human physicians can do this
  - Data Integrity and Bias:
    - Raises concerns about the unintentional encoding of race in data and the replication of bias, which AI tools can exacerbate
  - Transparency and Explainability:
    - Highlights how models perform well in certain aspects of analysis, but their developers cannot explain how they come to these conclusions
  - Trust:
    - Could enable and exacerbate subtle profiling and healthcare discrimination

While AI performance may seem accurate and objective, this case reminds us that pattern recognition comes with risks of mislabeling images. Actively addressing biases and updating algorithms is therefore important to ensure that models do not unintentionally internalize human social constructs and become, in effect, race-based models (Gichoya et al., 2022).

Conversely, the next case is a positive example of how AI has been integrated into clinical workflows to help physicians detect and analyze strokes in CT imaging. It is a model from which other AI healthtech developers should draw inspiration when building and deploying their products.

- RapidAI for Stroke Detection (“RapidAI Review,” 2024):
  - Summary: A study analyzing the accuracy of RapidAI, a model that analyzes strokes through CT imaging
  - Clinical Impact:
    - Strong evidence for sensitivity in detecting stroke, especially LVO and ICH.
    - Reduces diagnostic time and supports time-sensitive decisions.
  - Transparency and Explainability:
    - Easily interpretable outputs
  - Accessibility:
    - Limited by high cost and dependence on high-resolution imaging tech.
    - Access varies by institution and region.

RapidAI is a positive example of how AI can be used in an effective, efficient and trustworthy manner. By maintaining a clinical-outcomes-first mentality and validating through external research, this technology has been well received and integrated into many hospital systems as a tool for a physician, not a replacement. However, despite its strong technical performance, RapidAI is expensive, so access is largely limited to well-funded or private hospitals (“RapidAI Review,” 2024).

DISCUSSION

The criteria adapted from the California Initiative to Advance Precision Medicine provide a strong foundation for evaluating AI usage in healthcare (Precision Medicine: An Action Plan for California, n.d.). From the pitfalls and strengths of these five cases, however, three main questions emerge: Who benefits from AI and who controls it? What makes AI trustworthy? Can AI advance equity or simply reproduce harmful biases?

AI must be designed and governed with clear guidelines for consent, governmental and institutional oversight, and accountability to patients. Our current legal practices fall short in protecting patients from company interests, allowing unclear consent structures to proliferate and failing to establish guidelines for how law enforcement can access patient health data. This raises the question: who should be held responsible when something goes wrong?

In the case of the sepsis prediction model, the opacity of the algorithm left clinicians unable to understand the reasoning behind its decisions, which made it harder to use as a tool. The model had not been validated robustly enough to ensure accurate predictions, and as a result it misclassified patients (Wong et al., 2021). Medicine is complex and requires multiple tools and data points to make a healthcare decision.

The ACLU article points out many examples of data misuse. Law enforcement agencies have sought to use newborn genomic data, which was initially collected for care, for their own purposes (Grant, 2023). This typically takes place without parental knowledge or consent, showing the need for clear safeguards for our data.

RapidAI, by contrast, takes a better approach: its outputs help physicians make decisions rather than making the recommendation or the decision itself (“RapidAI Review,” 2024). Physicians are respected rather than replaced by AI, working alongside the technology to make quicker and better-informed decisions. The preferred arrangement is for the AI model to help the interpreter reach a more accurate judgement rather than to issue a concrete prediction, so that these models are treated as tools and not as the truth.

Trustworthiness is equally important: each algorithm or product should be tested repeatedly and should be easily interpretable. Across these cases, trust can be seen in the quality of clinical outcomes as well as in algorithmic explainability.

As described in the article about PRS, the concept of the “black box” becomes important, where algorithmic conclusions cannot be explained, not even by their developers. Unclear clinical relevance and the use of these tools without reasoning can cause trust to deteriorate, affecting how both patients and clinicians see them (Fritzsche et al., 2023). For patients, this leads to even more uncertainty and mistrust in the medical industry. For clinicians, it leads to confusion as to how to proceed with the care plan, especially if they receive contradictory information from other methods of testing.

In the article about the AI race detection model, the model was able to accurately predict a patient’s self-reported race from medical imaging, a feat that even human physicians cannot accomplish. The algorithm was able to identify race but could not explain or provide any reasoning as to how it came to such a conclusion (Gichoya et al., 2022). There was no justification, interpretation or roadmap of how this was found.

In both cases, the inability to explain how the model reaches its conclusions, or to decode the black box, makes the AI tools less legitimate and less trustworthy. This is where RapidAI shines: its design and deployment strategy prioritize trust. The tool was rigorously validated, physicians are aware of how the model produces its outputs and the clinical results are accurate (“RapidAI Review,” 2024). It continues to function as a tool, not a replacement for a physician, allowing everyone affected by it to understand what it is doing, how it works and how it benefits others. Clinicians can therefore rely on its outputs to help inform stroke decisions, which explains why the tool is so helpful.

The third category, equity, is heavily represented in the criteria derived from CIAPM’s paper and is one of the most important principles to consider when implementing AI tools in healthcare. The criteria address data quality and access, and this section also analyzes how bias is perpetuated by AI that is trained on poorly curated data.

In the study on the PRS tool, one conclusion was that the data fed to the algorithm came mostly from people of European descent, making the results less meaningful and less accurate for people of other racial or ethnic backgrounds (Fritzsche et al., 2023). This issue stems from societal biases, showing that the genomes and health of Europeans are considered the standard at the expense of other people. Coupled with the study that showed how AI can predict race from medical imaging, to the surprise of its developers, this is cause for deep concern (Gichoya et al., 2022). If AI can detect socially constructed categories from imaging data, then these categories may be unintentionally coded into other clinical models. AI models are a product of human biases and perpetuate them through algorithmic pattern recognition.

Even RapidAI, the only positive case study, raises equity concerns. The technology is technically sound and performs equitably across patients, yet it is very expensive (“RapidAI Review,” 2024). Wealthier institutions are the only facilities that have access to this infrastructure, while hospitals with less revenue are unable to invest in this kind of equipment. Equity of performance, in this case, does not translate into equity of implementation.

To implement these technologies responsibly, AI tools must be made transparent and must be accompanied by data justice, informed consent and fair resource distribution. We must consider how AI works, for whom, and at what cost.

RECOMMENDATIONS & CONCLUSIONS

These cases show that the earlier criteria were a good foundation for analyzing AI tools for implementation in healthcare. However, protecting patients as fully as possible also requires looking at the bigger picture: understanding how AI may continue to perpetuate existing dynamics in our society, and recognizing the need for clear governance and trust in technology. Even promising AI tools run the risk of reinforcing disparities by maintaining a cost barrier or an accuracy barrier for people not of European descent.

AI in healthcare must be treated as a social intervention rather than just a tool to be deployed. With this technology, we have the opportunity to lay groundwork for a more equitable healthcare industry.

References

- Beauchesne, O. H., SCImago Lab, Elsevier Scopus, Milstein, A., Meyers, F. J., Sakul, H., Gellert, J., Mega, J., Mesirov, J. P., Carpten, J., Goodwin, K., Suennen, L., Maxon, M. E., Milken, M., Lizerbram, S., Lockhart, S. H., Aragón, T. J., Lajonchere, C., Moore, G., . . . Duron, Y. (2018). Precision Medicine: an Action Plan for California (By K. Martin, A. Butte, & E. G. Brown). https://lci.ca.gov/ciapm/docs/reports/20190107-Precision_Medicine_An_Action_Plan_for_California.pdf

- Grant, C. (2023, November 21). Widespread newborn DNA sequencing will worsen risks to genetic privacy | ACLU. American Civil Liberties Union. https://www.aclu.org/news/privacy-technology/widespread-newborn-dna-sequencing-will-worsen-risks-to-genetic-privacy

- Wong, A., Otles, E., Donnelly, J. P., Krumm, A., McCullough, J., DeTroyer-Cooley, O., Pestrue, J., Phillips, M., Konye, J., Penoza, C., Ghous, M., & Singh, K. (2021). External validation of a widely implemented proprietary sepsis prediction model in hospitalized patients. JAMA Internal Medicine. https://doi.org/10.1001/jamainternmed.2021.2626

- Fritzsche, M., Akyüz, K., Abadía, M. C., McLennan, S., Marttinen, P., Mayrhofer, M. T., & Buyx, A. M. (2023). Ethical layering in AI-driven polygenic risk scores—New complexities, new challenges. Frontiers in Genetics, 14. https://doi.org/10.3389/fgene.2023.1098439

- Gichoya, J. W., Banerjee, I., Bhimireddy, A. R., Burns, J. L., Celi, L. A., Chen, L., Correa, R., Dullerud, N., Ghassemi, M., Huang, S., Kuo, P., Lungren, M. P., Palmer, L. J., Price, B. J., Purkayastha, S., Pyrros, A. T., Oakden-Rayner, L., Okechukwu, C., Seyyed-Kalantari, L., . . . Zhang, H. (2022). AI recognition of patient race in medical imaging: a modelling study. The Lancet Digital Health, 4(6), e406–e414. https://doi.org/10.1016/s2589-7500(22)00063-2

- Canadian Agency for Drugs and Technologies in Health. (2024, November 1). RapidAI Review. RapidAI for Stroke Detection: Main Report – NCBI Bookshelf. https://www.ncbi.nlm.nih.gov/books/NBK610423/